
Foreword

Supply chains have always been essential to organizations for the movement of goods and personnel, in times of both war and peace. Today, supply chains are receiving more popular attention than ever before. Geopolitical shifts, a truly global economy, and modern technology make individual consumers aware of price changes and product availability in real time.

Even the line between “war” and “peace” has become less certain, as cyberwarfare and psychological operations between competitor states could be an enduring feature of modern society.

The added attention and ongoing disruptions increase pressure on market participants in a new way. Those participants – companies, investors, policymakers, and more – must track policy and technology developments to stay ahead of competitors and keep stakeholders satisfied.

In this document, we focus on tactical elements that supply chain participants need to ensure their organizations are prepared for ongoing disruption. Such disruptions can come in the form of policy, competition, consumer trends, machine failures, environmental impacts – or any combination of these.

We are optimistic that better processes and better use of technology hold solutions for market participants. But be warned: the competition is doing it, too. Good luck!

[Editor’s note: the following was previously published in serialized form in The Supply Chain Skyline, a weekly roundup of policy issues affecting the supply chain. The serialized versions can be found in the editions published between October and December 2022.]

Building Resilient Supply Chains

- Companies active in the supply chain must manage their supply chain challenges while also persuading investors and partners that they have a viable plan.

- A core element of surviving the current challenges is resiliency, which we define here as an ability to adapt to unforeseen or novel shifts.

Since 2021, the term “supply chain” has moved beyond corporate buzzword status and into daily conversation. Fallout continues from the pandemic and many other factors as firms, nations, and communities experience the consequences of delays and shortages. Beyond the practical challenge, market actors must persuade investors, customers, and other stakeholders that they have a viable plan.

A company’s success depends on creative entrepreneurship among its leaders and workers. However, the supply chain challenge is being driven by several external factors, including the pandemic, geopolitics, regulations, infrastructure, and workforce issues. Companies need to maximize their visibility into these factors and have a plan.

The good news for companies is that this is a moment of great opportunity. Competitors are dealing with the same issues. The companies that understand the risk factors and apply the right strategies will have a major advantage.

Resilience is another hot topic, though its definitions and metrics vary. For our purposes, we will define resilience as the ability of systems to adapt to unforeseen or novel shifts in the operating environments for which they were designed.

Under this definition, systems can fail when they do not recognize a shift from “routine” to “novel,” or when they lack the skills to adapt even after recognizing the shift. Systems should aim to:

- improve the ability to anticipate a shift;

- reduce the latency in recognizing a shift; and

- build the human and technical capability to respond.

Each of these requirements is a function of human problem-solving capability (individual and collective), technology, culture, and processes. Resilience is often conflated with risk mitigation, but risk mitigation is only one part of it. Beyond 1) identifying and mitigating risk and 2) building adaptive capability, there is a third capability: 3) the ability to endure hardship while system dynamics move toward equilibrium.

Many problems on a global scale are intractable for centralized problem solving. However, stability can emerge from the aggregate of many collaborative problem-solving efforts. Collaboration leads to effective problem solving where:

- There exists trust between individuals involved in the collaboration.

- The collaborators are experts close to the domain with a vested interest in long-term sustainability of the system.

Collaboration is not effective at all scales; it should be carried out where and when it naturally makes sense. When discussing resilience in supply chain domains, that natural scale of collaboration is where we should focus our efforts. In the subsequent articles, we discuss resilience and how existing challenges can lead to success or failure for organizations.

Anticipating Shifts: Intuition, Machine Learning, and More

- Companies active in the supply chain must make use of machine learning and other technologies, while also developing human capacities to intuit coming shifts.

- Why it matters: Harnessing the human imagination can help teams better prepare for the future and make better use of technology to track supply chain disruptions.

Risk analysis and risk mitigation have long been recognized as central to the roles of supply chain managers, operations managers, and executives. In the traditional risk management model, managers attempt to identify a hazard, assess its likelihood, and estimate an impact.

Through this traditional model, managers create robust supply chains, fortified against a set of generally preconceived or previously experienced hazards.

These hazards can include shortages of specific materials such as microchips, wood, or rare earth elements. The ongoing labor shortage is also a challenge to many organizations along the supply chain. Regulatory policies, extreme weather conditions, or constraints with physical infrastructure can also introduce new supply chain pressures.

Anticipation can be much more challenging. Anticipation is the recognition of novel emerging risk. This task requires imagination, intuition, and the ability to quickly form a narrative from a deluge of information spread across diverse source domains.

Imagination is the ability to conceive of and form a mental picture of a novel threat. While we typically think of imagination as an individual attribute, the imaginations of people across a system can be aggregated to form pictures of new threats. Just as genetic algorithms breed candidate solutions in search of an optimum, individual imaginations can be combined to visualize new elements in the universe of threats. Imagining novel threats, no matter how unlikely, can be critical.

Intuition is the ability to sense that something is wrong. It is a feeling that guides action without full certainty: a type of internal or natural sensor. While mechanical sensors can collect and pass data for analysis, there is rarely a way to pass human intuition through the system to help that system anticipate.

Intuition can be broadly distributed through a system and touch every domain, but it requires a rapid process for collection and analysis. Some may read recent technology trends as suggesting that machine learning algorithms will make human intuition unnecessary. Regardless, machine learning is a useful tool for turning what employees are seeing into a usable threat signal.

Narrative formation is the ability to formulate a coherent story from available information. This goes beyond data visualization. Understanding the emergence of a novel crisis and its impact on your system requires the ability to both analyze data collected through formal processes and incorporate murky signals collected from less formal channels.

These informal channels may be personal observations, observations of industry colleagues, news stories, economic and business indices, and even anecdotes. Like imagination, narrative formation is enhanced when carried out collectively and iteratively.

Narratives may evolve. However, to be useful, they must be created within a short decision cycle and exposed to decision makers. Sometimes these narratives will be unrefined, but a well-run system will not insulate decision makers from them on that basis. Decision makers may consider competing narratives, especially in the early emergence of a novel threat. Narrative formation is an important skill set for organizations to develop.

Recognizing Shifts in the Supply Chain

- Organizations should establish processes to document past and ongoing disruptions as a means of improving supply chain adaptability.

- Why it matters: Adaptability and nimbleness are growing in importance as disruptions from technology and geopolitical shifts are likely over the course of this decade and beyond.

When a system faces a novel disruption, the existing plans, procedures, and mitigation efforts – almost by definition – are inadequate. Systems that fail to recognize the novel attributes of the current disruption will apply inappropriate tools – a situation which may lead to a failure to sustain the system. Recognizing the transition and characterizing the new environment is the most basic level of system resilience, and the prerequisite for adapting.

Geopolitical shifts are one example of novel disruptions. While great power competition is hardly novel, organizations may be slow to recognize shifts that occur after decades of a status quo.

What types of processes and mechanisms should managers design into systems to help recognize novel disruptions? There are three basic processes: baselining normal performance, characterizing past disruptions, and characterizing current disruptions.

Baselining normal performance is a matter of applying best practices in process improvement to monitor system performance against design parameters and quality targets. Organizations and systems should have methods in place to recognize and investigate shifts in the supply chain.
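As a minimal sketch of baselining, assuming a metric such as supplier lead time in days, the Python below uses a simplified Shewhart-style control chart: limits are set at the baseline mean plus or minus three sample standard deviations, and new observations outside those limits are flagged for investigation. The function names, the three-sigma multiplier, and the sample figures are illustrative assumptions, not a prescribed method.

```python
from statistics import mean, stdev


def control_limits(baseline, k=3.0):
    """Return (lower, center, upper) limits from a baseline of normal performance.

    Simplified Shewhart-style limits: center +/- k sample standard deviations.
    """
    if len(baseline) < 2:
        raise ValueError("baseline needs at least two observations")
    center = mean(baseline)
    sigma = stdev(baseline)
    return center - k * sigma, center, center + k * sigma


def flag_shifts(observations, limits):
    """Return the indices of observations that fall outside the control limits."""
    lower, _, upper = limits
    return [i for i, x in enumerate(observations) if x < lower or x > upper]


# Hypothetical supplier lead times (days) during a period of normal operation.
baseline = [10, 11, 9, 10, 12, 10, 11, 9, 10, 11]
limits = control_limits(baseline)
print(flag_shifts([10, 11, 14, 9, 18], limits))  # -> [2, 4]
```

A flagged point is a prompt to investigate, not proof of a shift. Practitioners often add run rules (for example, several consecutive points on one side of the center line) to catch gradual drifts that never cross the limits.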

Characterizing past disruptions is the process of documenting and describing attributes of past disruptions, their external causes, and their impacts on the system. This is not a one-person exercise; it can be carried out as part of an organization’s knowledge management program. However, because supply chain systems span multiple organizations, a true characterization of past disruptions will entail broader system and industry dialogue and collaboration.

This activity requires studying the formally collected supply chain and operations data, but also capturing the perspectives, observations, and tacit knowledge of personnel involved in responding to the disruption. These past disruptions should be studied along four dimensions:

- Commodity. This view studies the disruption from the perspective of raw inputs: raw materials and labor.

- Functional. This view studies the disruption from the perspective of individual system functions, including marketing, manufacturing, finance, transportation, procurement, distribution, and communications.

- Externalities. This view is concerned with the broader political, economic, and environmental conditions applicable to society at large.

- Response. This view documents responses, both planned and unplanned.

Once the disruption has been studied along these independent dimensions, the interactions across dimensions should be characterized.

Hotwashes, post-mortems, and after-action reviews are common in many industries. However, we recommend going further and building a searchable portfolio of individual scenarios. Organizations should develop technologies and processes for quickly parsing and documenting disruptions while the experience is fresh, with minimal impact on the organization’s efforts to move beyond the situation.

When faced with a scenario, organizations should be able to compare the emerging narrative with the attributes of past disruptions. If an emerging narrative demonstrates significant deviation, the organization should classify it as novel, and shape its response accordingly.

Characterizing current disruptions is the process of decomposing an emerging threat along the four dimensions discussed above. This process goes hand in hand with the narrative formation discussed earlier. Characterization assesses the disruptive scenario’s novelty and, with it, whether pre-planned responses are appropriate.
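One way to make a portfolio of past disruptions searchable is to tag each scenario along the four dimensions and score an emerging scenario against every entry. The Python sketch below assumes free-form tags and uses average Jaccard similarity (shared tags divided by all tags) over the dimensions the emerging scenario has filled in. The `Disruption` class, the tag vocabulary, and the 0.6 novelty threshold are hypothetical choices for illustration, not a method the series prescribes.

```python
from __future__ import annotations

from dataclasses import dataclass, field

DIMENSIONS = ("commodity", "functional", "externalities", "response")


@dataclass
class Disruption:
    name: str
    # Hypothetical free-form tags per dimension, e.g. {"commodity": {"microchips"}}.
    tags: dict[str, set[str]] = field(default_factory=dict)


def similarity(current: Disruption, past: Disruption) -> float:
    """Average Jaccard similarity over the dimensions tagged in the current scenario."""
    dims = [d for d in DIMENSIONS if current.tags.get(d)]
    if not dims:
        return 0.0
    scores = []
    for d in dims:
        a, b = current.tags[d], past.tags.get(d, set())
        scores.append(len(a & b) / len(a | b))  # a is non-empty, so no division by zero
    return sum(scores) / len(scores)


def classify(current: Disruption, portfolio: list[Disruption], threshold: float = 0.6):
    """Return (label, name of the closest past disruption, similarity score)."""
    if not portfolio:
        return "novel", None, 0.0
    closest = max(portfolio, key=lambda past: similarity(current, past))
    score = similarity(current, closest)
    return ("familiar" if score >= threshold else "novel"), closest.name, score


portfolio = [
    Disruption("chip shortage", {"commodity": {"microchips"},
                                 "functional": {"procurement", "manufacturing"},
                                 "externalities": {"pandemic"}}),
    Disruption("hurricane port closure", {"commodity": {"fuel"},
                                          "functional": {"transportation"},
                                          "externalities": {"extreme weather"}}),
]
emerging = Disruption("emerging", {"commodity": {"microchips"},
                                   "functional": {"procurement"},
                                   "externalities": {"export controls"}})
print(classify(emerging, portfolio))  # -> ('novel', 'chip shortage', 0.5)
```

Leaving the response dimension blank for an emerging scenario keeps it out of the score, since no response has been chosen yet. A closest match below the threshold is still useful: it points responders to the most relevant precedent while signaling that pre-planned responses may not fit.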

With the right training and leadership, these three basic processes will help an organization recognize and characterize a shift in the operating environment.

Social Capital for Enhancing Adaptability

- Organizations must implement preemptive planning both on process and on establishing social capital to allow better coordination over time.

- Why it matters: Adaptability is critical, but organizations need to establish relationships that will hold together in a crisis. This will make an organization more efficient in how it adapts.

Resilience can be defined as the ability to adapt to novel situations. In complex systems such as supply chains, adaptive capacity is a function of human problem-solving. The ability to adapt is a set of skills exercised in a group, often enhanced by technology. Collective problem-solving in a supply chain context is a social skill that entails wrangling and employing available resources to sustain the movement of material and information through a complex system.

Organizations may need to overcome social divisions within communities that reduce collective social capital. Developing a body of best practices can help mitigate this. Supply chain systems are a set of activities or processes executed by interdependent organizations. These systems operate in a context of political governance and a social or cultural environment.

A firm’s social capital is a measure of its ability to access resources across the industry, political, and community domains. Resources can include intellectual capital, goodwill, and even access to material resources outside the boundaries of the organization.

Building social capital across a system can make for more resilient and responsive systems. Increasing access to these resources can reduce constraints, opening more options for creative problem solving. Take, for example, the Port of Houston’s response to Hurricane Harvey in 2017.

The stakeholders operating in the ports along the Houston Ship Channel engage regularly in a variety of forums. Some of these forums are formal, such as the Safety Committee meeting, and some are more fraternal, such as the Houston Transportation Club. Many individual actors have built rapport across firms, across industries, and across the commercial-government boundary. These longstanding personal and professional relationships create high degrees of social capital that can be leveraged to overcome a shared crisis.

After Hurricane Harvey, this capital was accessed in two ways:

- First, through formal response mechanisms such as the Port Coordination Team (PCT), led by the U.S. Coast Guard. The PCT is a local adaptation of disaster response best practices such as those outlined in the National Response Framework.

- Second, through direct communication between stakeholders. In this instance, individual interests are suspended in the short term, as resources are employed to solve systemwide challenges. This is possible because trust is pre-established, and because all stakeholders are engaged in parsing the problem and developing the solution.

While it may be relatively easy to build social capital in a port context with longstanding stakeholders and a relatively narrow geographic focus, all organizations can increase social capital. In fact, existing supply chain management best practices, such as supplier relationship management, can be leveraged to enhance social capital.

As an organization advances through the supply chain maturity model, from multiple dysfunction to integrated enterprise to extended enterprise, it forms increasingly strong bonds across internal functions and across organizational boundaries. As an organization becomes more mature from a supply chain management perspective, it is likely gaining influence in the supply chain, the industry, and the community.

The reach and influence associated with a maturing supply chain is an opportunity both to access additional resources and to make more resources available when a novel disruption occurs. Social capital is built through partnerships that balance system health with competitive considerations.

Scenario-Based Training for Building Resilient Systems

- Training is critical for organizations to be prepared for potential disruptions. Establishing relationship networks and talking through potential issues is necessary prep work.

- Why it matters: Life comes at you fast in a crisis. Do your planning early. Get to know colleagues at other levels as well as counterparts in other organizations up and down the supply chain.

As managerial teams develop cohesion and experience, they become effective at resolving many routine challenges through a combination of standard procedures and intuition. However, from time to time, external shifts away from routine context occur. These shifts give rise to novel situations where the organization’s tacit knowledge and procedures may no longer serve as effective tools in problem solving. Previously, we discussed the importance of imagination, intuition, and narrative formation to help systems anticipate a novel disruptive event.

Organizations will need to have internal and external lines of communication to develop best practices for training. Successful managerial teams will be able to anticipate and recognize the transition to a novel event, and quickly formulate an effective and sustainable response. In particular, adaptive capacity can be built through scenario-based training, which is meant to build confidence, social capital, and imaginative and collaborative problem-solving. This scenario-based training can be carried out at four levels:

Level 1: Single Echelon, Single Organization: At its most basic, a single level of management (for example, executive-level managers within a single organization) faces the challenges as a single team. This focuses on the skills required by that level of management and allows a safe space for experimentation and learning in isolation. At this level, senior managerial echelons should be involved in scenario design and observe the training.

Level 2: Multiple Echelon, Single Organization: At this level, the scenario requires coordination across multiple functional areas of an organization and across multiple echelons of management. This level should also train simultaneously across geographies. This level still focuses on training skill sets within a managerial echelon, but also develops processes and problem-solving skills across echelons. Before conducting Level 2 training, each echelon should have conducted some degree of Level 1 training. Level 2 is still an opportunity to experiment, albeit with a more refined approach.

Level 3: Single Echelon, Multiple Organizations: This level of training is primarily for senior echelons across organizations within a tightly integrated supply chain: those operating within an integrated or extended enterprise. This level is best suited for organizations with established trust that are looking to improve their ability to collectively tackle disruptions defined primarily by strong externalities, such as a pandemic. Level 3 should, at a minimum, follow Level 1 training, and it assumes that each organization is bringing a strong managerial team that can remain coherent in the face of fast-paced and complex challenges. Level 3 is less suited to experimentation; instead, it focuses on building trust and increasing social capital across the supply chain.

Level 4: Multiple Echelon, Multiple Organizations: This level is appropriate for supply chains that have already established long-standing trust at the most senior levels of an organization, and which have established strategic partnerships on one or more key supply chain functions. While this level of training can enhance social capital and trust within a network, it comes with higher risk as organizations are expected to pull back the curtain and share sensitive information about internal processes.

Only organizations that are part of an integrated or extended enterprise model, whose relationship is based around a strategically important and highly difficult function, should engage in this level of training. This training focuses on building trust across strategic partners by learning to leverage intellectual and materiel resources of a partner organization within the limitations and constraints of the relationship.

Conducting Scenario-Based Training

- Conducting effective training requires several elements to ensure executives and other key personnel make the best use of their time to prepare for potential disruptions.

- Why it matters: Proper scenario-based training is critical for thinking through crises before they happen, so that organizations are prepared for the sudden changes that inevitably occur from time to time.

In this article, we will discuss the resources needed to carry out the scenario-based training (SBT) discussed previously.

Participants: SBT represents a significant investment of time and money, in which critical personnel are removed from their normal routines and isolated for one or more days. Given the nature of this training, participants should be selected based on their criticality to the operation of at least one entity in the supply chain. When balancing the need to include a participant against the associated cost, a leader might ask a few guiding questions:

- Does this person bring a deep level of technical and organizational knowledge that is not available from other participants?

- Would communication with a subordinate functional area or part of the organization suffer irreparably if this person were not included?

- Does this person manage a functional area that needs to build stronger relationships across the organization or across partners?

Training Objective: The desired outcome of each of the SBT events should be determined by the echelon of management or leadership above the highest level being trained. If the training is at the senior executive level, the senior members of this echelon should determine the objectives. The objectives will determine the design of the scenarios.

Trips: SBT is about taking the participants on a journey of discovery. The journey consists of a sequence of “trips.” A trip is the introduction of a new dynamic to the scenario designed to compound existing challenges. As the exercise progresses, the trips create the need for advanced coordination across teams, while testing the organizations’ ability to respond quickly and adapt their processes to novel and emergent conditions. The decisions made during each trip shape the emerging journey.

Guides: Guides are the training event facilitators. The role of the guide team is to develop the book of trips that will lead the training audience along the desired journey. The guides introduce each trip and record the narrative as played out by the training audience. The guides must allow the necessary room for experimentation and mistakes while keeping the journey on the intended trajectory. Ideally, the guides are hired from outside the organization and spend several weeks preparing meaningful and relevant trips that are vetted with the training audience’s leadership. Alternatively, a guide can come from within the organization, provided they are at least one level of leadership above the senior member of the training audience.

Role Players: These personnel assist the guides by adding context to each trip. A role player may represent an outside stakeholder such as a member of the media or a government agency. Role players can be used to introduce new information into the scenario and provide needed complexity for key moments in the training, with little added cost.

Technology: The technology stack must be tailored to the budget and the desired level of integration. In most training situations, analog or tabletop training aids are sufficient to achieve the training objectives. Testing technology and communication systems is critical to high performance during a disruption; however, this can be carried out as a separate and deliberate training event. The guides, in coordination with the organization’s training lead, develop the training aids during the event design period.

Organizations should allow at least one month to prepare for a training event. Third-party guides will need time to familiarize themselves with the operating domain, and several weeks to iterate through storyline and trip development with the management team(s). The training audience should also coordinate schedules and meetings, as well as allocate physical space for the training event.

Joint Analytics Management Across the Supply Chain

- Organizations must establish processes to harness the best data available for their own supply chain management, and seek to improve quality.

- Why it matters: Without a rigorous system of analytics management, an organization will be functionally blind when a crisis strikes – and crises are guaranteed to strike.

Joint Analytics Management (JAM) is the discipline of capturing, storing, distributing, and analyzing data across a multi-organizational supply chain. The importance of data in modern decision-making is well established, and technology, especially cloud-based technology, continues to make analytics more accessible.

While firms continue to master their own data to produce insights and support decisions, managing data shared across organizations is the next step for many of them. The growth of Big Data and its attendant controversies can overshadow the reality that organizations must obtain and use the best data available in order to succeed.

Here we discuss how organizations within a supply chain can coordinate their data management and analytics to anticipate novel disruptions and improve the quality of their response. The first article in this series focuses on data capture.

Supply chains that have reached higher levels of maturity, mainly integrated or extended enterprises, share data to smooth the flow of information, cash, and material across supply chain participants. Most firms operate a sophisticated technology stack that creates and captures a large volume and variety of data. This stack includes tools for both structured and unstructured data: CRM, SRM, ERP, RFID devices, GPS, event management systems, and transportation and warehouse management systems.

Data may include unstructured data such as customer service transcripts or imagery. There is also third-party data that may be captured, such as textual data from media reports, or weather data. Capturing all this data requires an investment in hardware, software, and specialized skill sets. To justify this investment, captured data must ultimately be transformed into insights that drive revenue, cut costs, or mitigate a crisis. When capturing data, organizations should ask the following questions to ensure a return on their investment:

- What is the useful life cycle for the data?

- What is required to make the data pipeline compatible with the analytics toolkit?

- How can the quality of the data be managed at the point of creation?

- What format is best suited to maximize accessibility of the data within the organization and across organizations?

- What data format is best suited to optimize movement of data through the pipeline and minimize query latency?

- What are the high-impact decisions the data can inform?

- What is the optimal frequency of moving the data to an environment accessible for analysis?

- What policies and controls will govern access to the data within an organization, and across a supply chain?
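Answers to these questions can be recorded as a per-feed data contract and partly enforced in code. The Python sketch below is a hypothetical illustration: the `DataContract` fields map to a few of the questions (life cycle, format, refresh frequency, decisions informed), and `validate` checks each record's required fields and types at the point of creation, before it enters the pipeline. The feed name, fields, and values are assumptions, not a standard schema.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class DataContract:
    """Hypothetical record of the capture questions for one data feed."""
    feed: str
    retention: timedelta              # useful life cycle of the data
    storage_format: str               # e.g. "parquet"
    refresh: timedelta                # how often data moves to the analytics environment
    decisions: tuple[str, ...]        # high-impact decisions the data informs
    required_fields: dict[str, type]  # schema enforced at the point of creation

    def is_expired(self, created: datetime, now: datetime) -> bool:
        """True once a record has outlived its useful life cycle."""
        return now - created > self.retention


def validate(record: dict, contract: DataContract) -> list[str]:
    """Return quality problems found in a record; an empty list means accept it."""
    problems = []
    for name, expected in contract.required_fields.items():
        if name not in record:
            problems.append(f"missing {name}")
        elif not isinstance(record[name], expected):
            problems.append(f"{name} should be {expected.__name__}")
    return problems


gate_events = DataContract(
    feed="terminal_gate_events",
    retention=timedelta(days=365),
    storage_format="parquet",
    refresh=timedelta(minutes=15),
    decisions=("berth scheduling", "drayage dispatch"),
    required_fields={"container_id": str, "gate_time": datetime, "weight_kg": float},
)
print(validate({"container_id": "X", "weight_kg": "heavy"}, gate_events))
# -> ['missing gate_time', 'weight_kg should be float']
```

Rejecting or quarantining a record at creation is usually far cheaper than cleaning it after it has been shared with partner organizations.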

Sensors and telemetry devices for the capture and transmittal of data continue to proliferate, along with traditional data collection platforms such as points of sale. Given the diversity of data sources and types, organizations should create a flexible architecture that can adapt quickly to new sources.

As one example, a system initially built for traditional transactional data should be designed to quickly onboard streaming data sources such as video, or textual data such as news reports. Within the supply chain context, an organization must think beyond its own internal data management: it must also create the policies and architecture to capture data in a shared domain.
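A common way to keep an ingestion architecture flexible is a registry of source adapters: each source type gets a small function that maps its raw records into one shared envelope, so onboarding a new source means registering one adapter rather than rewriting the pipeline. The Python sketch below assumes a hypothetical envelope with `source`, `timestamp`, `kind`, and `body` keys; the source names and raw field names are illustrative only.

```python
from __future__ import annotations

from typing import Callable

# Registry mapping a source type to a function that normalizes its raw records.
ADAPTERS: dict[str, Callable[[dict], dict]] = {}


def adapter(source_type: str):
    """Decorator that registers a normalizing function for a source type."""
    def register(fn: Callable[[dict], dict]) -> Callable[[dict], dict]:
        ADAPTERS[source_type] = fn
        return fn
    return register


@adapter("erp_order")
def from_erp(raw: dict) -> dict:
    # Structured transactional data.
    return {"source": "erp_order", "timestamp": raw["order_date"],
            "kind": "structured", "body": {"sku": raw["sku"], "qty": raw["qty"]}}


@adapter("news")
def from_news(raw: dict) -> dict:
    # Unstructured text, onboarded later without touching the ERP path.
    return {"source": "news", "timestamp": raw["published"],
            "kind": "text", "body": raw["headline"]}


def ingest(source_type: str, raw: dict) -> dict:
    """Normalize one raw record, failing loudly for unregistered sources."""
    fn = ADAPTERS.get(source_type)
    if fn is None:
        raise ValueError(f"no adapter registered for {source_type!r}")
    return fn(raw)


print(ingest("news", {"published": "2022-10-03", "headline": "Port congestion eases"}))
```

Downstream analytics then read only the envelope, so a video or telemetry feed added later needs a new adapter but no change to the consumers.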

Analytics Coordination Teams

- An informal or formal coordinating team with representatives from different organizations that all work within a particular domain, such as a port, can be a reservoir of scenario planning in the event of a crisis.

- Why it matters: Laying the groundwork for extraordinary coordination can be an essential timesaver when a crisis such as a war or a natural disaster strikes.

In the ports along the Houston Ship Channel, there exists an informal organization called the Port Coordination Team (PCT). This team is made up of many port stakeholders, such as harbor tug companies, shipping companies, the U.S. Coast Guard, the U.S. Army Corps of Engineers, and terminals. Each member brings a set of unique interests, resources, and capabilities.

Although this is an informal group, there are well-rehearsed protocols and standards of behavior and engagement that guide the operations. The PCT serves as a pre-existing and generally inactive node that can be activated for collaborative problem-solving when a common crisis such as a hurricane or oil spill occurs. The PCT is a mechanism for the allocation and deployment of social capital. This concept inspired the idea of an Analytics Coordination Team (ACT).

Ports are a particularly timely area for considering better coordination methods, especially in the U.S., as they are among the points of most intense supply chain pressure.

An Analytics Coordination Team is a pool of shared third-party resources that operates and maintains a shared analytics infrastructure and coordinates cross-organization data management and analytics through liaisons in the participating organizations. The ACT has several roles:

- Maintain the infrastructure for sharing data.

- Develop new pipelines for shared data compatibility across organizations and tools.

- Perform real-time analytics to monitor the supply chain for warning signs and anticipate threats.

- Coordinate data requests and access controls across supply chain organizations.

- Coordinate with in-house analysts to share insights across the supply chain.
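To illustrate the access-control role, the Python sketch below assumes a simple model that the series does not prescribe: each shared dataset has one contributing owner, only that owner can approve another organization's request, and every grant expires so that access opened during a crisis does not persist indefinitely. `AccessRegistry`, its methods, and the organization names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Grant:
    dataset: str
    owner: str      # organization that contributed the data
    grantee: str    # organization receiving read access
    expires: datetime


class AccessRegistry:
    """Hypothetical ledger of owner-approved data requests kept by the ACT."""

    def __init__(self) -> None:
        self._owners: dict[str, str] = {}
        self._grants: dict[tuple[str, str], Grant] = {}

    def register_dataset(self, dataset: str, owner: str) -> None:
        self._owners[dataset] = owner

    def approve(self, dataset: str, approver: str, grantee: str,
                duration: timedelta, now: datetime) -> Grant:
        """Record a time-limited grant; only the dataset's owner may approve."""
        owner = self._owners.get(dataset)
        if owner is None or owner != approver:
            raise PermissionError(f"{approver} cannot approve access to {dataset}")
        grant = Grant(dataset, owner, grantee, now + duration)
        self._grants[(dataset, grantee)] = grant
        return grant

    def can_read(self, dataset: str, org: str, now: datetime) -> bool:
        """Owners always read their own data; others need an unexpired grant."""
        if self._owners.get(dataset) == org:
            return True
        grant = self._grants.get((dataset, org))
        return grant is not None and now < grant.expires


registry = AccessRegistry()
registry.register_dataset("berth_schedule", "terminal_a")
start = datetime(2022, 10, 1)
registry.approve("berth_schedule", "terminal_a", "carrier_b", timedelta(days=30), start)
print(registry.can_read("berth_schedule", "carrier_b", start + timedelta(days=1)))   # -> True
print(registry.can_read("berth_schedule", "carrier_b", start + timedelta(days=31)))  # -> False
```

Keeping approval with the data owner while the ACT holds the ledger reflects the trusted-third-party role: the ACT coordinates access but does not decide it.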

Sharing resources, talents, data, and infrastructure across organizations can create ambiguity around roles and responsibilities. The ACT adds clarity by serving as a trusted third party tasked with focusing on the shared resources and problem sets. Stakeholders can negotiate custom funding arrangements for the coordination team and shared assets. Beyond serving as a trusted third party, the coordination team delivers multiple benefits:

- A universal view of the supply chain, and a focus on the shared challenges.

- An unbiased view focused on monitoring and analysis of supply chain health.

- Cost-sharing of sophisticated and expensive engineering, governance, and analytics resources.

- Access to these resources by the most budget-constrained members of the supply chain.

In the event of a crisis, the individual organizations can leverage the ACT infrastructure as a focal point of data-driven coordination. Like the PCT in Houston, an ACT can expand and contract based on supply chain conditions. The ACT should be forward-looking, constantly scanning for threats on the horizon, and constantly shoring up its architecture to ensure data and communications are protected.

As part of this forward-looking, supply-chain-focused function, the coordination team must work with unstructured as well as structured data. Although political and economic risks are best assessed by professionals with specialized knowledge, these assessments can be enhanced with analytics tools designed to identify triggers in textual or other unstructured data. The specialty skill sets required to execute this type of analysis are expensive and well suited for consideration as a shared resource across a supply chain.
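As a minimal sketch of trigger identification in unstructured text, the Python below counts how many documents (for example, news headlines) mention each category in a hypothetical trigger lexicon and raises an alert when a category reaches a threshold. It uses plain regular-expression matching as a deliberately simple stand-in for the more capable natural language processing tools an ACT might adopt; the categories, patterns, headlines, and threshold are all assumptions.

```python
import re
from collections import Counter

# Hypothetical trigger lexicon; in practice, maintained with political and economic analysts.
TRIGGERS = {
    "labor": [r"\bstrikes?\b", r"\bwalkouts?\b", r"\blabou?r disputes?\b"],
    "trade_policy": [r"\btariffs?\b", r"\bexport controls?\b", r"\bsanctions?\b"],
    "weather": [r"\bhurricanes?\b", r"\btyphoons?\b", r"\bflood(s|ing)?\b"],
}
COMPILED = {category: [re.compile(p, re.IGNORECASE) for p in patterns]
            for category, patterns in TRIGGERS.items()}


def scan(documents):
    """Count, per category, the documents that match at least one trigger pattern."""
    counts = Counter()
    for doc in documents:
        for category, patterns in COMPILED.items():
            if any(p.search(doc) for p in patterns):
                counts[category] += 1
    return counts


def alerts(counts, threshold):
    """Categories mentioned in at least `threshold` documents, sorted by name."""
    return sorted(category for category, n in counts.items() if n >= threshold)


headlines = [
    "Dockworkers vote to strike at two terminals",
    "New export controls announced on chipmaking tools",
    "Strike talks resume as rail unions weigh offer",
    "Typhoon expected to make landfall near major port",
]
counts = scan(headlines)
print(alerts(counts, threshold=2))  # -> ['labor']
```

An alert here is a cue for a specialist to read the underlying documents, not a risk assessment in itself.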

The ACT should be governed by a committee of CIOs and CTOs from across the supply chain. In a global supply chain, there may be added risk embedded within the coordination team itself, and the structure of this team must be regularly reevaluated.

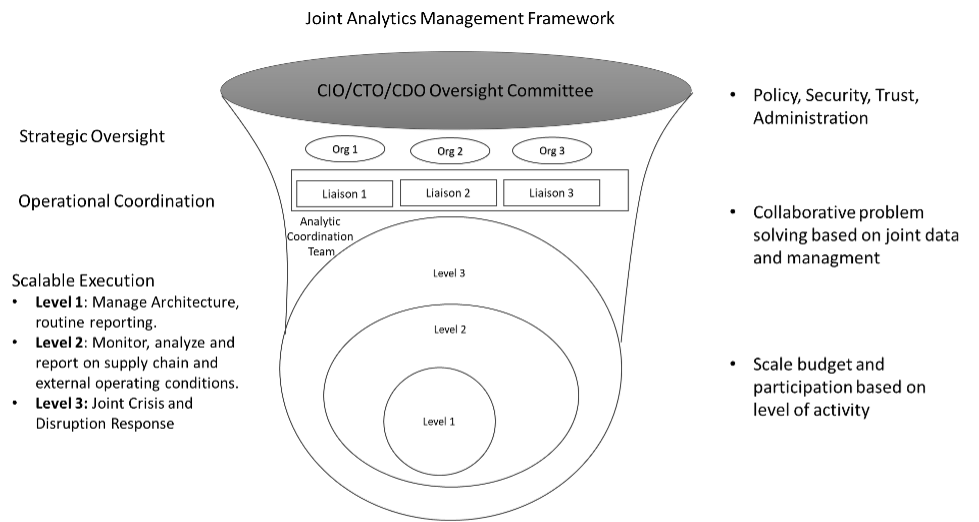

Imagine a supply chain that includes suppliers or manufacturers in East Asia who participate in the supply chain's Joint Analytics Management program (see Figure 1). If a war were to occur in this region, not only would the supply chain be affected, but the shared architecture and resources themselves could pose a threat. The governing committee's role is to ensure that the coordination team is structured appropriately and that the benefits outweigh the costs and risks.

Template for Joint Analytics Management

- The practices suggested in this series on supply chain management also require that technical tools be set up to provide the humans in the loop with the analytics they need to effectively respond to changes in the operating environment.

- The right tools for the right job, appropriately segmented and dedicated to individual organizations within the supply chain, can mean the difference between success and failure in a crisis.

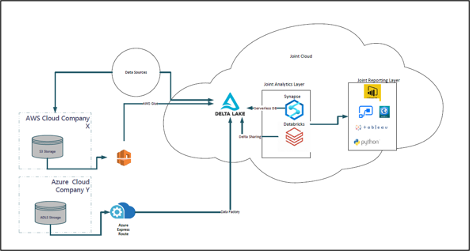

A joint analytics environment is defined as a shared cloud environment where data relevant to overall supply chain health and sustainability is stored, secured, analyzed, and visualized by an Analytics Coordination Team and the supply chain at large (see Figure 2). There are several key components to a robust joint analytics environment:

- A joint cloud storage layer where data deemed relevant to multiple supply chain actors is available in a low-latency format. In Figure 2, this is represented by the Delta Lake, a virtualized data structure using an open-source file format such as Parquet.

- A cloud-based analytics toolkit including ML/AI development tools with integrated access to the storage layer.

- Data pipelines for moving data into the joint architecture from multiple cloud platforms (e.g., AWS, Azure). Most cloud providers have specialized tools for streaming, IoT, or telemetry data.

- An access control layer to govern access both within the joint environment and within the individual organizational environments. This can be achieved through directory services such as Active Directory and through data virtualization executed through elastic data pools.

- A security layer to prevent malicious access.
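The access control layer ultimately lives in the identity provider and storage platform. Its core rule can still be sketched in a few lines: each dataset in the joint environment has an owning organization and an explicit set of organizations it is shared with, and everything else is denied by default. The class, organization, and dataset names below are hypothetical, and this is an illustration of the rule, not a substitute for platform-level enforcement.

```python
from dataclasses import dataclass, field


@dataclass
class JointAccessPolicy:
    """Dataset-level read grants for a joint analytics environment (illustrative only)."""

    owners: dict[str, str] = field(default_factory=dict)
    readers: dict[str, set[str]] = field(default_factory=dict)

    def register(self, dataset: str, owner: str, shared_with: set[str]) -> None:
        """Publish a dataset to the joint environment with explicit read grants."""
        self.owners[dataset] = owner
        # The owning organization always keeps access to its own data.
        self.readers[dataset] = set(shared_with) | {owner}

    def revoke(self, dataset: str, org: str) -> None:
        """Withdraw an organization's access, e.g. when it is off-boarded."""
        if org != self.owners.get(dataset):
            self.readers.get(dataset, set()).discard(org)

    def can_read(self, org: str, dataset: str) -> bool:
        """Deny by default: unknown datasets and ungranted organizations get no access."""
        return org in self.readers.get(dataset, set())
```

For instance, a supplier might register its inventory dataset for the ACT and one manufacturer only. Another supplier querying that dataset would be refused, and the grant to the manufacturer can be revoked if it leaves the program.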

In one example, two organizations within the supply chain operate distinct cloud environments with different technology stacks. Relevant data is moved in parallel directly to the organization-specific clouds and to the joint cloud, reducing the need for duplicative copying. When designing the specific technology stack for the joint cloud, there are several key considerations:

- Scalability: Can new organizations be on-boarded efficiently and effectively as supply chain maturity increases?

- Compatibility: Are the BI and analytics tools hosted on the joint cloud compatible with organization-specific tools and data formats?

- Right-sized: Are the selected capabilities compatible with the level of organizational analytics and data governance maturity? If not, how does the supply chain intend to align its maturity level with the analytics and governance capabilities of the joint environment?

- Cost: How do we factor in all direct costs of the architecture including maintenance, operation, and training?

- Personnel: Is there a readily available labor market for the selected technology stack?

- Support and Innovation: Does the selected technology stack come from innovative and supportive vendors with the ability to continuously provide well-managed and cost-effective upgrades to the newest cutting-edge capabilities?

These are some basic considerations when partners collaboratively determine a technology stack for a joint analytics environment.
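One way to make these considerations comparable across candidate stacks is a weighted scoring matrix. The sketch assumes each of the six criteria is scored on a 1 to 5 scale. The weights are placeholders that the oversight committee would negotiate, not recommended values.

```python
# Placeholder weights for the six considerations; they sum to 1.0.
WEIGHTS = {
    "scalability": 0.20,
    "compatibility": 0.20,
    "right_sized": 0.15,
    "cost": 0.20,
    "personnel": 0.10,
    "support_innovation": 0.15,
}


def weighted_score(scores: dict[str, float]) -> float:
    """Combine 1-5 criterion scores into a single weighted score."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"unscored criteria: {sorted(missing)}")
    return sum(weight * scores[name] for name, weight in WEIGHTS.items())


def rank_stacks(candidates: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Return (stack, score) pairs, best first."""
    scored = [(name, weighted_score(s)) for name, s in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

Refusing to score a stack with a missing criterion keeps partners from quietly skipping an uncomfortable question such as personnel availability. The ranking supports the committee's negotiation but does not replace it.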

Developing a Joint Analytics Management Program

- Organizations wary of potential supply chain disruptions should consider reaching out to partners to form a joint analytics management program.

- Working closely together will help develop both the tools and the relationship ecosystem to weather crises when they occur.

Setting up a joint analytics management program amongst supply chain partners can be a relatively simple task if the right leaders are involved from the foundational moments through execution. As discussed previously, the joint analytics management program consists of an organizational component and an architectural component.

Such programs can also be critical for industry groups under pressure from policymakers to get a handle on supply chain or other systemic challenges. Industry organizations want to be able to demonstrate that they have a coherent plan, and are executing on it, to tackle current and future challenges that may affect the broader community of stakeholders.

The oversight committee is the accountable body that collectively shapes and monitors the program. This committee consists of Chief Data, Technology, and Information Officers who possess both strong domain knowledge of their firms' contributions to the supply chain and strong technical expertise. This group must negotiate and direct all key areas of the program:

- Agreeing to the principle of an Analytics Coordination Team (ACT).

- Determining the composition and budget of the ACT.

- Negotiating and establishing the shared cost framework.

- Developing the protocols for flexing the ACT.

- Coordinating scenario-based training of the ACT for disruption response across the four training levels discussed previously.

- Establishing protocols for including data in the joint environment.

- Developing a process for assessing the technology stack and vendors.

- Developing a process for prioritizing and monitoring data management and analytic efforts.

- Continually monitoring the performance of third-party service providers on the ACT.

- Preventing and resolving inter-organization trust shortfalls and tensions.

The members of the oversight committee must combine tech savvy, negotiation skills, and foresight. A good oversight committee will provide not only strategic guidance but also the level of expert oversight that can only be achieved by engaging directly with operators.

Building a Joint Analytics Management Architecture

- The right approach to preparing for supply chain resilience is critical. Supply chain executives need to treat design, testing, and operations as separate key phases.

- Why it matters. Building your analytics approach from the ground up is a necessary strategy for large organizations with complex supply chains. When a crisis strikes, there will be plenty of flying by the seat of your pants, but you want as many tools in place beforehand as possible.

Analytics and data management across a supply chain is carried out by an Analytics Coordination Team (ACT) in a joint analytics management environment. This joint environment is a cloud-based hardware and software stack designed to monitor the supply chain while providing decision support and crisis/disruption management.

Because this environment receives data from multiple organizations to create a common operating picture and decision support, designing and building the architecture requires a thoughtful and deliberate process.

We propose a three-phase model for architecting and building out the joint analytics environment. This development model relies on a waterfall approach early in the design phase and later converges to an agile approach. This ensures a robust strategic design and prototype, while delivering incremental gains in the implementation phase.

Phase 1: Enterprise Architecture Design

In this phase, a joint architecture committee is formed with the sole purpose of identifying requirements and building out a joint analytics management environment. This phase is carried out by a team of IT specialists and architects from across the supply chain organizations and is guided by a lead architect, a subject matter expert (SME) who can be appointed by several methods.

1) Dominant stakeholder selection. The lead architect can be selected by the dominant stakeholder or ‘channel master’ in the supply chain. In this method, the organization to which others are converging is best positioned to lead the effort.

2) ACT hire. A third-party SME can be hired as part of the ACT. In this method, the third-party lead can be a fully dedicated and unbiased design lead.

3) Peer selection. The lead architect can be selected from among the organizational architects and IT SMEs. Like the first method, this method is lower-cost, and it has the added benefit of fostering trust and collaboration among designers, since the lead is selected by peers on the basis of expertise.

Designers may consider adopting a 2×2 mindset. This approach has two principles.

- Ensure that the next two generations of supply chain participants can be on-boarded and off-boarded as the supply chain matures. This ensures the system is flexible enough to scale as the supply chain includes more organizations operating in the Joint Analytics Management (JAM) environment.

- Ensure that the technology can remain effective through two generations of moderate technology developments without a complete architectural overhaul. While it is difficult to predict the future of technology, designers should attempt to remain as cutting-edge as possible so that the JAM environment delivers a competitive advantage and a return on investment (ROI) within its expected life cycle.

There are five main steps in this phase:

- Inventory and assess each organization’s data management architecture: While some may have little formal architecture in place, others may use competing cloud platforms or data management technologies.

- Conduct a security impact assessment: Since each organizational architecture will be connected to the JAM environment, the design team should assess the security of each environment to plan risk mitigation efforts and to set standards that must be achieved before onboarding to the JAM environment.

- Inventory analytic tools, reports, and projects: It may be possible to support existing products better from the new environment and to leverage them for the supply chain. Additionally, existing tools may have a user base that needs to be considered when architecting the new JAM environment.

- Determine technology stack: Decide on the technology and vendors that will be used to build the JAM environment, accounting for known and anticipated integration requirements. This step determines how components fit together, the licensing requirements, budget, and security. When determining the software, the design team should attempt to negotiate licensing agreements that can be reassessed during prototyping if the software doesn’t meet the supply chain needs.

- Obtain permissions to prototype: Once the technology stack is determined and vendors are selected, the design team should obtain the budget and administrative permissions to begin prototyping. Prototyping is an important step, based on agile principles aimed at incrementally delivering value.
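The security impact assessment step calls for standards that each environment must meet before onboarding, and that requirement can be expressed as a simple gate. The sketch below assumes the assessment produces, for each organization, the set of controls it has verified. The control names are hypothetical placeholders, not a recommended baseline.

```python
# Hypothetical baseline controls; the real list would come out of the
# security impact assessment agreed by the design team.
REQUIRED_CONTROLS = frozenset({
    "mfa_enforced",
    "encryption_at_rest",
    "encryption_in_transit",
    "audit_logging",
})


def onboarding_report(assessments: dict[str, set[str]]) -> dict[str, set[str]]:
    """Map each organization to the required controls it is still missing.

    An empty set means the organization meets the baseline.
    """
    return {org: set(REQUIRED_CONTROLS - controls) for org, controls in assessments.items()}


def ready_orgs(assessments: dict[str, set[str]]) -> list[str]:
    """List, alphabetically, the organizations that can be onboarded now."""
    return sorted(org for org, gaps in onboarding_report(assessments).items() if not gaps)
```

Reporting the specific gaps, rather than a bare pass or fail, gives each organization a concrete remediation list to work through before the prototype connects to its environment.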

Phase 2: Prototype and Test

The main point of prototyping the new JAM environment is to ensure that all the data pipelines and tool integrations are accounted for and working. There are three main tasks in this phase.

- Go Agile: Establish a multi-organizational, enterprise-architecture-scaled agile delivery train. This well-established and familiar framework enables planning and development efforts. At this point the design team should bring other stakeholders into the planning process, such as analytics teams, existing system administrators, and application experts. These stakeholders will provide the business cases and test cases for the architecture.

- Plan: The design team should develop a plan for deploying each component of the technology stack. For each module onboarded, a test is planned to support incremental business value and avoid compounding unknown technical debt. The plan should include coordinating data samples to test pipelines and analytics tools, as well as establishing data formatting standards across the pipeline.

- Deploy and Test: The engineering team deploys and tests the technology stack. The details of this task will differ depending on the architecture, but this step should also include basic data structuring such as deploying a schema, virtualizing the data, and setting up access controls.
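The data formatting standards agreed during planning can be checked against the coordinated data samples before those samples enter a pipeline. The following is a minimal sketch that assumes a hypothetical shipment feed with five agreed fields. It checks field presence and types and reports unexpected fields. A real deployment would more likely enforce the schema in the storage layer or with a dedicated schema library.

```python
from datetime import date

# Hypothetical agreed record standard for a shipment feed.
SHIPMENT_SCHEMA = {
    "shipment_id": str,
    "origin": str,
    "destination": str,
    "quantity": int,
    "ship_date": date,
}


def validate(record: dict) -> list[str]:
    """Return a list of problems with a sample record; an empty list means it conforms."""
    errors = []
    for name, expected in SHIPMENT_SCHEMA.items():
        if name not in record:
            errors.append(f"missing field: {name}")
        elif not isinstance(record[name], expected):
            errors.append(
                f"{name}: expected {expected.__name__}, got {type(record[name]).__name__}"
            )
    # Fields outside the agreed standard are reported rather than silently dropped.
    for name in sorted(set(record) - SHIPMENT_SCHEMA.keys()):
        errors.append(f"unexpected field: {name}")
    return errors
```

Running every organization's sample through the same check surfaces formatting mismatches, such as quantities sent as text, during the prototype rather than after data has been migrated.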

The prototyping provides an opportunity to validate the design and, if necessary, revisit design decisions. Deliberately adding subsequent layers to the architecture should only be done once the interaction between existing layers is validated.

Phase 3: Operate

Once the prototyping has resulted in a viable JAM environment, the ACT can begin operating on the environment. This includes migrating data into the data lake, developing a data model, developing visualizations, performing analytics, monitoring supply chain health in real time, and using both internal and external supply chain data to anticipate emerging threats. The JAM environment can also be used to perform scenario-based training to prepare collaborative responses to disruptions.

A well-thought-out and deliberate approach to joint analytics management in a supply chain can help an organization build the adaptive capacity needed to collaborate and navigate through a complex supply-chain-wide disruption. We hope this will serve as a high-level framework for leaders. A successful implementation requires these leaders to establish intent, expected outcomes, and priorities, and then to let a team of subject matter experts collaborate and execute while periodically validating alignment with leadership intent.

About the Authors

Domenico Amodeo holds a PhD in Systems Engineering and is an officer in the U.S. Army Reserve, where he commands a transportation battalion, with previous service in Iraq. He is currently a consultant for Accenture.

Loren A. Smith, Jr. is the Executive Director of the Knudsen Institute. He previously served as an appointee at the U.S. Department of Transportation and the U.S. Department of Labor. As an investor research analyst at Capital Alpha Partners from 2009 to 2016, he published more than 500 research notes on policy affecting the supply chain.